ACM SIGGRAPH Workshop: Truth in Images, Videos, and Graphics

Organizers: Irfan Essa, Chris Bregler, Hany Farid

Purpose

One of the goals of computer graphics is to create images, scenes, and videos that appear real and are indistinguishable from live-captured content. This goal is now largely achievable: images and videos can be synthesized with such realism that we cannot tell whether the content shown to us is live-captured, a mixture of live content with added manipulations and edits, or completely synthetic. While the ability to create such synthetic or hybrid content is a much-needed tool for entertainment and storytelling, it can also be used to distort the truth. Recently, we have witnessed a significant increase in both the number and the success of manipulations in media. Modern graphics techniques are creating challenges for journalistic processes, as the truth can be easily manipulated and then shared widely. Because tools from computer graphics and multimedia can now create images and videos that are indistinguishable from the real thing, they are very effective at manipulating the beliefs of consumers.

The goal of this inaugural workshop is to bring together researchers and practitioners in all aspects of media creation to understand the challenges as tools for manipulation are made available widely. We will discuss the tools and the issues around how these technologies impact society, and reflect on the responsibilities of both the technology creators and users of these technologies.

The format of this workshop will include invited speakers to set the stage for this conversation.

Topics

- Videos of real people saying something they never said.

- http://grail.cs.washington.edu/projects/AudioToObama/

- Detection of manipulation.

- https://arxiv.org/abs/1805.04953

- https://arxiv.org/pdf/1805.04096.pdf

- Staging is manipulation

- https://petapixel.com/2012/10/01/famous-valley-of-the-shadow-of-death-photo-was-most-likely-staged/

- https://www.nytimes.com/2011/09/04/books/review/believing-is-seeing-by-errol-morris-book-review.html

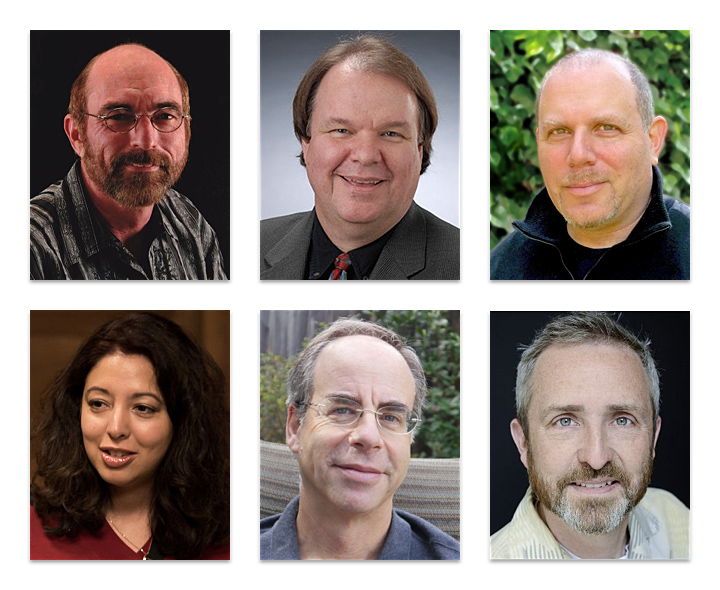

Speakers

Chris Bregler

Chris Bregler currently works at Google. He was on the faculty at New York University and Stanford University and has worked for several companies including Hewlett Packard, Interval, Disney Feature Animation, LucasFilm's ILM, Facebook's Oculus, and the New York Times. He received his M.S. and Ph.D. in Computer Science from U.C. Berkeley and his Diplom from Karlsruhe University. In 2016 he received an Academy Award in the Oscars' Science and Technology category. He has been named Stanford Joyce Faculty Fellow, Terman Fellow, and Sloan Research Fellow. He received the Olympus Prize for achievements in computer vision and pattern recognition and was awarded the IEEE Longuet-Higgins Prize for "Fundamental Contributions in Computer Vision that have withstood the test of time". His work has resulted in numerous awards from the National Science Foundation, Sloan Foundation, Packard Foundation, Electronic Arts, Microsoft, Google, U.S. Navy, U.S. Air Force, N.S.A., C.I.A., and other sources. He has been the executive producer of Squidball.net, which required building the world's largest real-time motion capture volume, and a massive multi-player motion game holding several world records in The Motion Capture Society. He has been active in the visual effects industry, for example, as the lead developer of ILM's Multitrack system, which has been used in many feature film productions, including Avatar, Avengers, Noah, Star Trek, and Star Wars.

Alyosha Efros

Alexei (Alyosha) Efros joined UC Berkeley in 2013. Prior to that, he spent nine years on the faculty of Carnegie Mellon University, and he has also been affiliated with École Normale Supérieure/INRIA and the University of Oxford. His research is in the area of computer vision and computer graphics, especially at the intersection of the two. He is particularly interested in using data-driven techniques to tackle problems where large quantities of unlabeled visual data are readily available. Efros received his PhD in 2003 from UC Berkeley. He is a recipient of the CVPR Best Paper Award (2006), NSF CAREER award (2006), Sloan Fellowship (2008), Guggenheim Fellowship (2008), Okawa Grant (2008), Finmeccanica Career Development Chair (2010), SIGGRAPH Significant New Researcher Award (2010), ECCV Best Paper Honorable Mention (2010), 3 Helmholtz Test-of-Time Prizes (1999, 2003, 2005), and the ACM Prize in Computing (2016).

Webpage: https://www2.eecs.berkeley.edu/Faculty/Homepages/efros.html

Irfan Essa

Irfan Essa is a Distinguished Professor of Computing at Georgia Institute of Technology (GA Tech) in Atlanta, Georgia, USA, and a Research Scientist at Google in Mountain View, CA, USA. At GA Tech, he is in the School of Interactive Computing (iC), is an Associate Dean of Research in the College of Computing (CoC), and serves as the Inaugural Director of the new Interdisciplinary Research Center for Machine Learning at Georgia Tech (ML@GT). Essa works in the areas of Computer Vision, Machine Learning, Computer Graphics, Computational Perception, Robotics, Computer Animation, and Social Computing, with potential impact on Autonomous Systems, Video Analysis and Production (e.g., Computational Photography & Video, Image-based Modeling and Rendering), Human Computer Interaction, Artificial Intelligence, Computational Behavioral/Social Sciences, and Computational Journalism research. He has published over 150 scholarly articles in leading journals and conference venues on these topics, and several of his papers have won best paper awards. He has been awarded the NSF CAREER award and was elected to the grade of IEEE Fellow. He has held extended research consulting positions with Disney Research and Google Research and was also an Adjunct Faculty Member at Carnegie Mellon’s Robotics Institute. He joined the GA Tech faculty in 1996 after earning his MS (1990) and Ph.D. (1994) and holding a research faculty position at the Massachusetts Institute of Technology (Media Lab) [1988-1996].

Webpage: www.irfanessa.com / Twitter: @irrfaan

Hany Farid

Hany Farid served as the Albert Bradley 1915 Third Century Professor and Chair of Computer Science at Dartmouth until 2017. After a sabbatical in 2018-2019, he is joining the faculty of Computer Science at the University of California at Berkeley in 2019. Farid’s research focuses on digital forensics, image analysis, and human perception. He received his undergraduate degree in Computer Science and Applied Mathematics from the University of Rochester in 1989, an M.S. in Computer Science from SUNY Albany, and a Ph.D. in Computer Science from the University of Pennsylvania in 1997. Following a two-year post-doctoral fellowship in Brain and Cognitive Sciences at MIT, he joined the faculty at Dartmouth in 1999. He is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and he is a Fellow of the IEEE and the National Academy of Inventors. He is also the Chief Technology Officer and co-founder of Fourandsix Technologies and a Senior Adviser to the Counter Extremism Project.

Webpage: http://www.cs.dartmouth.edu/farid/

Ira Kemelmacher-Shlizerman

Ira Kemelmacher-Shlizerman is a Scientist and Entrepreneur. Ira's interests are in the intersection of computer vision, computer graphics, and learning. A major part of her work is to invent virtual and augmented reality experiences to empower people in their day-to-day activities, and to develop algorithms for modeling people from unconstrained photos, videos, audio, and language. Dr. Kemelmacher-Shlizerman is an Assistant Professor in the Allen School at the University of Washington, Founder and Co-Director of the UW Reality Lab, and a Research Scientist at Facebook. She founded the startup Dreambit, which was acquired by Facebook Inc. in 2016, and transferred the Face Movies product to Google Inc. in 2011. Ira received her Ph.D. in computer science and applied mathematics at the Weizmann Institute of Science. Her work has received the Google Faculty Award, the Madrona Prize, and the Innovation of the Year Award (2016), has been selected for the covers of CACM and SIGGRAPH, and is frequently covered by national and international media. She has served as an area chair and technical committee member for both CVPR and SIGGRAPH, and is part of the Expert Network at LDV Capital.

Webpage: https://homes.cs.washington.edu/~kemelmi/

Hao Li

Hao Li is CEO/Co-Founder of Pinscreen, assistant professor of Computer Science at the University of Southern California, and the director of the Vision and Graphics Lab at the USC Institute for Creative Technologies. Hao's work in Computer Graphics and Computer Vision focuses on digitizing humans and capturing their performances for immersive communication and telepresence in virtual worlds. His research involves the development of novel geometry processing, data-driven, and deep learning algorithms.

He is known for his seminal work in non-rigid shape alignment, real-time facial performance capture, hair digitization, and dynamic full body capture. He was previously a visiting professor at Weta Digital, a research lead at Industrial Light & Magic / Lucasfilm, and a postdoctoral fellow at Columbia and Princeton Universities. He was named one of the top 35 innovators under 35 by MIT Technology Review in 2013 and was also awarded the Google Faculty Award, the Okawa Foundation Research Grant, and the Andrew and Erna Viterbi Early Career Chair. He won the Office of Naval Research (ONR) Young Investigator Award in 2018. Hao obtained his PhD at ETH Zurich and his MSc at the University of Karlsruhe (TH).

Webpage: http://www.hao-li.com/

Logistics

A $40 registration fee is required by 9am Pacific Time on Tuesday Aug 7 to attend lunch. Unregistered attendees may participate if space allows, but lunch will not be provided.

Applications will be accepted on a first-come, first-served basis until August 7th, 9AM PDT. Apply to participate!