At one time, a persistence of vision (POV) display was most likely to be seen in the rear window of a vehicle, there for fun rather than function. These displays rely on the “afterimage” that briefly persists in one’s vision; almost without exception, the design includes a rapidly oscillating or rotating linear array of LEDs that takes advantage of this property of the human eye and brain. Few people, however, have thought to make these interesting “light shows” interactive. JANUS, a project under development at the Design Media and HCI labs of KAIST in Daejeon, South Korea, aims to expand both the functionality and the practicality of these devices.

JANUS, which was exhibited in the Emerging Technologies program at SIGGRAPH 2014, not only solves an intrinsic problem with the design of POV displays, but also adds properties not commonly associated with them. Most of these displays have a single LED array facing one viewer, so anyone looking from the other side sees the image reversed. KAIST’s project therefore includes a secondary arm on the opposing side of the device, which lets two viewers see either the same image from the correct perspective or two entirely different images. This could be a nice solution to a difficulty common to transparent display devices of any kind. In addition, the “blades” of the display contain ninety-six separate tri-color LEDs, so as to produce full-color images for both viewers.
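To make the reversal problem concrete, here is a minimal sketch of one way to prepare a frame for the far-side viewer: flip it left to right before sending it to the second arm. The source does not describe how JANUS actually does this; the function name `mirror_for_rear_viewer` and the list-of-rows image format are assumptions made for illustration.

```python
def mirror_for_rear_viewer(image):
    """Return a left-to-right flipped copy of a row-major image.

    `image` is a list of rows, each row a list of pixel values.
    (Hypothetical helper; not taken from the JANUS project.)
    """
    return [list(reversed(row)) for row in image]


# A tiny 2x3 "image" whose columns read R, G, B from the front.
front = [
    ["R", "G", "B"],
    ["R", "G", "B"],
]

# The frame a rear-facing arm would need so the far-side viewer
# also reads R, G, B from left to right.
rear = mirror_for_rear_viewer(front)
```

Showing two entirely different images would simply mean feeding each arm its own unrelated frame instead of a flipped copy.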

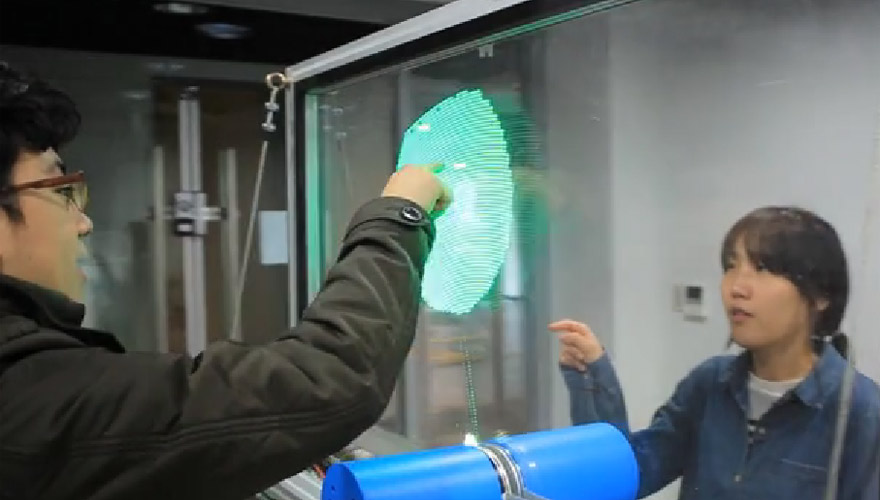

The project’s teams did not stop there, however; a more interesting problem, perhaps, is that of interacting with these moving displays. Obviously, one cannot simply “touch” the image on most existing implementations of the display without risking injury (this project’s blades rotate at 1,000 RPM!). JANUS makes direct interaction possible by placing two sheets of plexiglass between the observer and the blade and, for input, adding NEXIO ATI0500 infrared touch sensor frames. The combination produces something new; one might even go so far as to call it “holographic” in nature, though JANUS does not strictly fit that definition.

The control hardware in JANUS consists of only two components: a computer that receives the input from the touch sensor frames, and a Raspberry Pi that handles the image data. It is a fittingly simple arrangement for a relatively simple, but innovative, device. According to the project specifications, the “blade” is currently set to display a single line of image data for every degree of rotation.
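The figures quoted in this article (1,000 RPM, one line per degree, ninety-six LEDs) are enough for a rough sketch of what the image handling involves: how long each line stays lit, and how a line might be sampled from an ordinary image. This is not the project's code. The function names, the square list-of-rows image, and the assumption that the LEDs run from the hub out to the blade tip are all hypothetical.

```python
import math

RPM = 1000            # rotation speed quoted in the article
LINES_PER_REV = 360   # one line per degree, per the article
LEDS_PER_BLADE = 96   # LED count quoted in the article


def line_period_seconds(rpm=RPM, lines_per_rev=LINES_PER_REV):
    """Time available to show one line before the next one is due."""
    seconds_per_rev = 60.0 / rpm
    return seconds_per_rev / lines_per_rev


def polar_line(image, angle_deg, leds=LEDS_PER_BLADE):
    """Sample a square image along one radius (nearest-neighbour).

    Assumes LED 0 sits at the hub and the last LED at the image edge;
    `leds` must be at least 2. Hypothetical layout, for illustration.
    """
    size = len(image)
    center = (size - 1) / 2.0
    theta = math.radians(angle_deg)
    line = []
    for i in range(leds):
        r = center * i / (leds - 1)          # distance from the hub
        x = int(round(center + r * math.cos(theta)))
        y = int(round(center - r * math.sin(theta)))  # image y grows downward
        line.append(image[y][x])
    return line


# A 5x5 test image whose pixels record their own (x, y) coordinates.
demo = [[(x, y) for x in range(5)] for y in range(5)]
east = polar_line(demo, 0, leds=3)    # hub to right edge
north = polar_line(demo, 90, leds=3)  # hub to top edge
period_us = line_period_seconds() * 1e6
```

Under these assumptions one revolution takes 60 ms, so each of the 360 lines has roughly 167 microseconds on screen, which gives a sense of how quickly the image data must be pushed out to the LEDs.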

Although it is not a perfect answer to our desire for fully holographic displays, this advanced implementation of the age-old POV display is certainly a unique concept, and it invites plenty of interesting applications. The KAIST teams have experimented with image manipulation, particularly interaction between users on opposite sides of the screen; for instance, they created a variation on the universally recognized Snake to demonstrate the possibilities. From the SIGGRAPH 2014 Emerging Technologies paper, JANUS: “A player can control a snake and let it go through a hole [perceived in the screen]. A snake appears on the other side and the other player has to finish his/her mission to get the snake back to the previous side.”

It is easy to expand upon this idea of player-to-player interaction, and the teams provided additional examples of other concepts; clearly, there is reason to be interested in where JANUS leads in time. These are the kinds of projects that may lead us to the future of display technologies.