ARForce

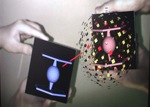

A novel marker-based system for augmented reality that measures not only the 3D positions and IDs of markers, but also the distribution of force vectors applied to the markers. Users can observe overlaid virtual images and control them with their fingers.

Enhanced Life

Many current marker-based AR systems can easily combine virtual imagery with the real world, but their user-input options are limited, and it is difficult for users to freely manipulate the virtual images without special electronic devices. Because ARForce detects the distribution of force vectors applied to each interface as well as its 3D position and ID, users can manipulate the virtual images by pinching and twisting the interface in three-dimensional space.

Goals

The overall goal is to develop a novel tangible interface for augmented reality.

Specifically, the goal is to provide a marker-based interface that allows users to observe overlaid virtual images on the real world and manipulate the images with their fingers.

Innovations

The core innovation is an interface design that detects 3D positions, IDs, and force input at the same time without special electronic devices installed on the interface. The sensing process:

1. The system detects the 3D position and ID of each interface through position markers attached to the interface. The square-detection method of ARToolKit tracks positions, and an original pattern-matching method detects IDs.

2. To detect the force-vector distribution, the system tracks the movement of force markers embedded inside the interface. Infrared filters make the markers invisible to users.

3. As users push, twist, or stretch the interface, the system generates virtual images in appropriate positions with auditory feedback.
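The second step of the sensing process can be illustrated with a minimal sketch. It is not the ARForce implementation: the page does not say how marker movement is converted into force, so the sketch assumes a simple linear model in which each force marker's in-plane displacement from its rest position is scaled by a constant. The stiffness value, the function names, and the 2D coordinates are all hypothetical. The sketch also sums the per-marker vectors into a net force and a torque about the marker centroid, which is one possible way to tell a push from a twist.

```python
import numpy as np

# Hypothetical stiffness in newtons per millimetre of marker displacement.
# This value is an assumption for illustration, not a figure from ARForce.
STIFFNESS_N_PER_MM = 0.5


def force_vectors(rest_xy, tracked_xy, stiffness=STIFFNESS_N_PER_MM):
    """Estimate one in-plane force vector per marker from its displacement.

    Assumes a linear model: force = stiffness * (tracked - rest).
    """
    rest = np.asarray(rest_xy, dtype=float)
    tracked = np.asarray(tracked_xy, dtype=float)
    if rest.shape != tracked.shape:
        raise ValueError("rest and tracked marker arrays must have the same shape")
    return stiffness * (tracked - rest)


def net_force_and_torque(rest_xy, forces):
    """Sum the force distribution into a net force and a scalar torque.

    The torque is taken about the centroid of the rest positions;
    a positive value means a counterclockwise twist.
    """
    rest = np.asarray(rest_xy, dtype=float)
    forces = np.asarray(forces, dtype=float)
    arms = rest - rest.mean(axis=0)
    net = forces.sum(axis=0)
    # 2D cross product of lever arm and force: x * Fy - y * Fx
    torque = float(np.sum(arms[:, 0] * forces[:, 1] - arms[:, 1] * forces[:, 0]))
    return net, torque


if __name__ == "__main__":
    # Four hypothetical force markers at the corners of a 2 mm square.
    rest = np.array([[0.0, 0.0], [2.0, 0.0], [2.0, 2.0], [0.0, 2.0]])
    # Every marker slides 1 mm to the right, as in a sideways push.
    pushed = rest + np.array([1.0, 0.0])
    net, torque = net_force_and_torque(rest, force_vectors(rest, pushed))
    print("net force:", net, "torque:", torque)
```

Under these assumptions, a uniform slide of all markers yields a nonzero net force with zero torque, while markers moving tangentially around the centroid yield a nonzero torque with zero net force.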

Vision

In the near future, ARForce will be enhanced so that it can measure more detailed tactile information and let users control virtual objects as if they were real objects. Ultimately, ARForce will provide a novel computer-human interface that supports more natural and intuitive input in everyday life.

Contributors

Kensei Jo

The University of Tokyo

Yasuaki Kakehi

Keio University/Japan Science and Technology Agency

Kouta Minamizawa

Hideaki Nii

Naoki Kawakami

Susumu Tachi

The University of Tokyo